In today’s interconnected world, the advancement of Edge AI has revolutionized the way we process and analyze data. Edge AI brings intelligence closer to the device, enabling real-time decision-making and reducing the need for centralized processing. However, one often-overlooked aspect of Edge AI is efficient data movement.

Efficient data movement largely determines whether a system can reach its full throughput and efficiency. Edge devices cannot afford to stall while bottlenecks from parallel processing are resolved. With Edge AI, processing is distributed, so deterministic latency becomes critical to handling data locally and efficiently.

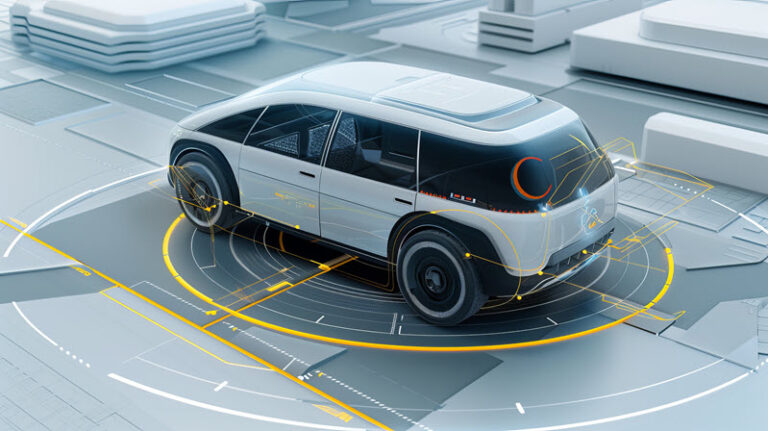

Effective data movement is critical to edge devices, which have limited computational resources and memory bandwidth and tight energy budgets, yet must make decisions in near real time. For example, in autonomous vehicles, processing data from all the cameras, lidars, and other sensors at the edge can yield faster responses and possibly prevent an accident.

MIPS’ architecture enables a tailored solution with tight integration of embedded CPUs and the overall SoC architecture, handling the data movement and memory balancing to eliminate bottlenecks. MIPS cores are designed for simplicity and efficiency, enabling compact code sizes for edge applications, helping to optimize storage and enhance overall system performance, including deterministic latency across end-to-end systems.

The cores can be easily and coherently integrated alongside other processors, accelerators, and compute subsystems, while allowing customization for the unique requirements of embedded applications. Tighter integration translates into fewer bottlenecks and lower overhead when sharing and moving data.

At the core of enabling better data movement are MIPS’ unique multi-threading capabilities, which enable multiple software threads to execute efficiently in parallel. Read our multi-threading whitepaper.

With more modern approaches to multi-threading, hardware can alternate operations from different threads of execution on each processor cycle with no overhead, meaning every CPU cycle can have an operation executing. Additionally, in a superscalar CPU architecture, where multiple concurrent operations are supported even for a single thread, the hardware can be executing multiple instructions for two or more different threads even in the same cycle. This is referred to as simultaneous multi-threading (SMT).
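To make the idea of filling every cycle concrete, here is a minimal sketch in Python of a toy single-issue core that switches between hardware threads each cycle at zero cost. It is an illustration under stated assumptions, not a model of any MIPS core: the `HwThread` class, the `simulate` function, and the instruction latencies (1 cycle for an ALU operation, 4 cycles for a load) are all hypothetical, and the multi-issue (SMT) case is not modeled.

```python
from dataclasses import dataclass


@dataclass
class HwThread:
    """Hypothetical hardware thread context for a toy single-issue core."""
    latencies: list      # cycles each instruction occupies its thread, in program order
    pc: int = 0          # index of the next instruction to issue
    ready_at: int = 0    # first cycle at which the next instruction may issue


def simulate(programs):
    """Return (cycles_until_last_issue, instructions_issued).

    Each cycle the core issues at most one instruction, chosen round-robin
    from the threads whose previous instruction has completed. Switching
    threads costs zero cycles (an assumption of this toy model).
    """
    threads = [HwThread(list(p)) for p in programs]
    cycle = 0
    issued = 0
    last = -1  # index of the thread that issued most recently
    while any(t.pc < len(t.latencies) for t in threads):
        # Search for a ready thread, starting just after the last issuer.
        for offset in range(1, len(threads) + 1):
            idx = (last + offset) % len(threads)
            t = threads[idx]
            if t.pc < len(t.latencies) and t.ready_at <= cycle:
                t.ready_at = cycle + t.latencies[t.pc]
                t.pc += 1
                issued += 1
                last = idx
                break
        # If no thread was ready, this cycle is an empty issue slot.
        cycle += 1
    return cycle, issued


# Assumed workload: two ALU ops (1 cycle each), then a load (4 cycles), twice.
PROGRAM = [1, 1, 4, 1, 1, 4]

for n_threads in (1, 2, 4):
    cycles, issued = simulate([PROGRAM] * n_threads)
    print(f"{n_threads} thread(s): {issued} instructions in {cycles} cycles, "
          f"utilization {issued / cycles:.2f}")
```

In this toy workload, a single thread leaves issue slots empty while it waits on each load, whereas four threads keep the issue slot busy on every cycle because another thread is always ready when one is waiting.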

Multi-threading can bring significant cost and power advantages in embedded cores relative to instantiating additional cores. The system’s overall throughput and compute requirements can be met with fewer processor resources.

Effective data movement is a critical enabler of responsiveness, scalability, and power efficiency. By optimizing the flow of data between edge devices and CPUs, organizations can unlock the full potential of distributed intelligence across a wide range of applications, including automotive EVs and software-defined vehicles, computer-vision-based AI systems, and enterprise compute and networking systems. As Edge AI continues to evolve, data movement will only grow in importance for realizing new innovations.