Introduction

The rise of AI has unleashed an insatiable demand for faster, smarter, and more scalable data center networks. As GPUs and accelerators become the backbone of AI training and inference, traditional network designs struggle to keep up with the explosive growth in data and computational intensity. The network is no longer just a means of connectivity; it is a critical driver of compute performance.

This paper advocates converging scale-up and scale-out back-end networks under simplified, unified management, an approach suited to modern AI data centers built from GPU/accelerator PoDs (collections of server nodes). By tailoring the intra-PoD (scale-up) and inter-PoD (scale-out) architectures to AI’s unique demands, organizations can improve speed, efficiency, and reliability while remaining adaptable to different use cases.

Through insights drawn from large-scale deployments, we explore how this converged network strategy optimizes performance for AI/ML workloads, ensures predictable QoS, enhances security, and lays the groundwork for next-generation AI infrastructure.

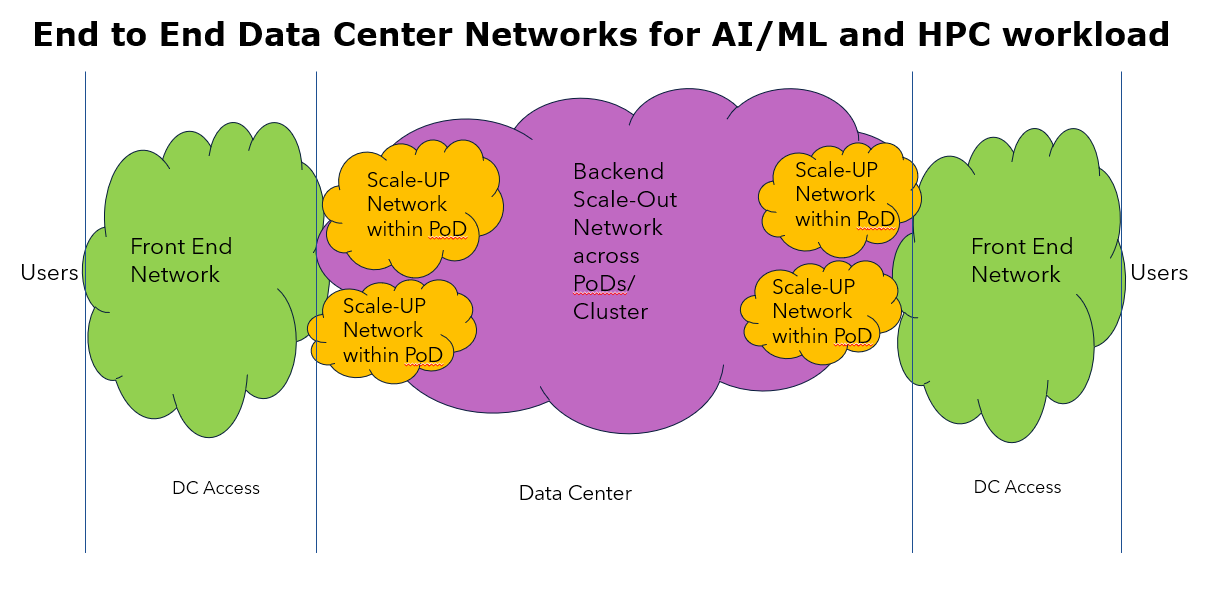

Data Center Network Architecture Overview

The key components of the end-to-end data center network architecture are:

- Front-end Network (Existing Data Center Fabric)

The front-end network connects users, datasets, and services to GPU and AI accelerator resources, which makes it critical for seamless data flow and user interaction in AI-driven environments. It carries:

- User access to GPU and AI accelerator resources

- Dataset ingestion and preprocessing

- Model serving and inference requests

- Management and monitoring traffic

- Inter-service communication

The front-end network’s quality of service (QoS) must adapt to the varied demands of AI workloads while maintaining flexibility and reliability. Key requirements include:

- Flexible Bandwidth Allocation

- Support for burst traffic (up to 10x baseline)

- Standard TCP/IP optimization techniques

- Multiple traffic classes (e.g., management, user data, inference)

Example Use Case

A machine learning platform may handle traffic for 10,000 users, from data scientists uploading training datasets to services requesting real-time model inference. The front-end network ensures smooth and efficient handling of such diverse demands.

- Scale-Up and Scale-Out Back-end Networks (GPU and AI Accelerator Fabric)

The back-end network, also known as the GPU and AI accelerator fabric, is the cornerstone of high-performance inter-device communication for AI-driven data centers. Its architecture supports the unique demands of distributed AI workloads, such as training and inference. Below is an overview of its key functions and features:

Responsibilities of the Back-end Network:

- Distributed memory sharing

- Gradient aggregation for large language models

- Collective communications in multi-node AI training

- RDMA (remote direct memory access) and RMA (remote memory access, i.e., direct load/store) operations requiring guaranteed delivery

- Precise congestion control

Back-end Network QoS Specifications:

- Guaranteed Bandwidth: ~400/800 Gbps per device

- Low Latency: ~2 μs

- Lossless Transmission

- Predictable performance under loaded conditions

Typical technical requirements are ultra-low latency (<1 μs for scale-up and <10 μs for scale-out), guaranteed bandwidth of up to 800 Gbps per device, and lossless transmission.

Example Workload:

Training a 175-billion-parameter model across 1,024 or more GPUs involves:

- Handling approximately 350 GB of gradient data per training iteration.

- Delivering deterministic latency and bandwidth to ensure synchronized performance.
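The 350 GB figure is consistent with 175 billion parameters at two bytes per gradient value. The text does not state the numeric precision, so the two-byte (FP16/BF16) width below is an assumption, as are the ring all-reduce algorithm and the simplification that every GPU holds the full gradient set (pure data parallelism). Under those assumptions, a rough sizing sketch looks like this:

```python
# Back-of-the-envelope sizing for the example workload.
# Assumptions (not stated in the text): 2-byte (FP16/BF16) gradients,
# a ring all-reduce, pure data parallelism, and the full 800 Gbps
# line rate available to every device.

PARAMS = 175e9            # model parameters
BYTES_PER_GRADIENT = 2    # assumed FP16/BF16
GPUS = 1024
LINK_GBPS = 800           # per-device bandwidth from the QoS list above


def gradient_bytes(params: float, bytes_per_value: int) -> float:
    """Total gradient payload produced in one training iteration."""
    return params * bytes_per_value


def ring_allreduce_bytes_per_gpu(payload: float, n: int) -> float:
    """Bytes each GPU sends in a ring all-reduce: 2 * (n - 1) / n * payload."""
    return 2 * (n - 1) / n * payload


def transfer_seconds(num_bytes: float, link_gbps: float) -> float:
    """Ideal transfer time at line rate, ignoring latency and protocol overhead."""
    return num_bytes * 8 / (link_gbps * 1e9)


payload = gradient_bytes(PARAMS, BYTES_PER_GRADIENT)
per_gpu = ring_allreduce_bytes_per_gpu(payload, GPUS)
print(f"gradient payload: {payload / 1e9:.0f} GB")    # 350 GB
print(f"sent per GPU:     {per_gpu / 1e9:.1f} GB")    # 699.3 GB
print(f"ideal time:       {transfer_seconds(per_gpu, LINK_GBPS):.2f} s")  # 6.99 s
```

Real deployments combine data, tensor, and pipeline parallelism and overlap communication with compute, so these numbers are an upper-level illustration of why per-device bandwidth matters, not a prediction of iteration time.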

Separation of Scale-Up and Scale-Out Networks

To optimize performance, the back-end network is divided into two specialized segments:

- Scale-Up Network

- Focuses on accelerator-to-accelerator communication within a PoD (up to 1,024 accelerators).

- Leverages direct load/store memory access for ultra-low latency operations.

- Scale-Out Network

- Facilitates communication across clusters of thousands of accelerators.

- Utilizes RDMA for efficient data transfer across PoDs or accelerators.
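The split described above amounts to a simple decision per transfer: traffic that stays inside a PoD uses the scale-up fabric with load/store semantics, and traffic that crosses PoDs uses the scale-out fabric with RDMA. A minimal sketch of that decision follows; the flat accelerator numbering, the `Path` type, and the function names are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass

POD_SIZE = 1024  # upper bound on accelerators per PoD quoted above


@dataclass(frozen=True)
class Path:
    fabric: str      # "scale-up" or "scale-out"
    semantics: str   # "load/store" or "RDMA"


def pod_of(accelerator_id: int, pod_size: int = POD_SIZE) -> int:
    """Hypothetical flat numbering: consecutive IDs fill one PoD at a time."""
    return accelerator_id // pod_size


def select_path(src: int, dst: int, pod_size: int = POD_SIZE) -> Path:
    """Pick the fabric for a transfer based on PoD membership."""
    if pod_of(src, pod_size) == pod_of(dst, pod_size):
        return Path("scale-up", "load/store")
    return Path("scale-out", "RDMA")


print(select_path(3, 900))     # both in PoD 0: scale-up, load/store
print(select_path(3, 2048))    # PoD 0 to PoD 2: scale-out, RDMA
```

In a real system this choice is usually made by the collective communication library or the fabric itself rather than by application code.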

Benefits of Separation:

- Performance Optimization

- Reduces training time for large models by up to 40%.

- Decreases gradient synchronization latency by 65%.

- Eliminates performance variation in multi-tenant setups.

- Network Segmentation and Specialization

- Fixed packet sizes tailored for GPU/AI memory transfers.

- Deterministic latency paths for predictable performance.

- Specialized congestion control algorithms for enhanced efficiency.

- Enhanced Security

- Physical isolation of networks for improved security.

- Independent authentication domains and security policies.

- Controlled data flows to safeguard sensitive model training data.

- Differentiated Quality of Service (QoS)

- Specialized QoS treatment for intra-PoD (scale-up) and inter-PoD (scale-out) traffic as needed.

- Priority and credit-based congestion control mechanisms as needed.

- Guaranteed bandwidth and zero packet loss for critical workloads.

The separate and specialized scale-up and scale-out networks are pivotal for enabling scalable, efficient, and secure AI infrastructure in modern data centers.
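The credit-based mechanism mentioned above achieves zero packet loss by letting a sender transmit only when the receiver has advertised free buffer space. The sketch below is a simplified, single-link model of that idea; the class and method names are hypothetical and do not correspond to any particular fabric's API.

```python
from collections import deque


class CreditLink:
    """Sender side of a link with credit-based flow control (illustrative)."""

    def __init__(self, receiver_buffer_slots: int):
        self.credits = receiver_buffer_slots  # one credit per free receiver slot
        self.pending = deque()                # packets waiting for a credit
        self.delivered = []                   # packets placed in the receiver buffer

    def send(self, packet: str) -> None:
        """Queue a packet and transmit whatever the current credits allow."""
        self.pending.append(packet)
        self._drain()

    def return_credits(self, n: int) -> None:
        """Receiver freed n buffer slots, so n more packets may be transmitted."""
        self.credits += n
        self._drain()

    def _drain(self) -> None:
        # Transmit in order while both a credit and a packet are available.
        while self.credits > 0 and self.pending:
            self.delivered.append(self.pending.popleft())
            self.credits -= 1


link = CreditLink(receiver_buffer_slots=2)
for i in range(5):
    link.send(f"pkt{i}")
print(link.delivered, len(link.pending))   # two delivered, three held back
link.return_credits(3)
print(link.delivered, len(link.pending))   # all five delivered, none dropped
```

Because the sender never transmits without a credit, the receiver buffer cannot overflow; congestion shows up as sender-side queuing instead of packet loss.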

Unified Implementation Strategy

Designing an efficient and scalable back-end network requires meticulous consideration of hardware and software components to meet the demands of AI workloads. Below are key aspects of the flexible implementation:

- Converged Fabric Technology

- High-Performance Interconnect Options: Leverage RDMA/RMA, InfiniBand HDR/NDR, RoCE v2, and similar technologies to achieve high bandwidth and low latency for AI workloads.

- Topology Design: Use non-blocking Clos or single-layer topologies, as needed, to maximize data throughput and minimize congestion.

- Switching Technology Options: Employ dedicated Ethernet-based or PCIe-based switches, as needed, that are optimized for GPU and AI accelerator traffic.

- Configuration Best Practices

- Optimized RDMA Settings: Configure RDMA for high-speed, low-latency data transfer with fine-tuned flow control mechanisms.

- Flow Control Options: Implement Priority-based Flow Control (PFC) or credit-based flow control, as needed, to avoid packet loss during traffic bursts and keep operation smooth under load.

- Congestion Management: Use Explicit Congestion Notification (ECN) or signal-based controls to maintain network stability.

- Dynamically Connected Transport (DCT): Adopt DCT for scalable connections in distributed environments, balancing performance and resource usage.

- Routing Strategies

- Converged Routing: Use efficient IP routing algorithms or direct routing techniques per destination to streamline data movement across nodes.

- Load Balancing: Deploy Equal-Cost Multi-Path (ECMP) or packet spraying to distribute traffic evenly and prevent bottlenecks.
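The two load-balancing options named above behave differently for the small number of long-lived, high-bandwidth flows typical of AI training. ECMP hashes each flow onto one path, so a few large flows can leave some links idle, while packet spraying distributes individual packets across all paths. The sketch below contrasts the two; the uplink count, addresses, ports, and the CRC32 hash are illustrative assumptions rather than any switch's actual behavior.

```python
import zlib
from collections import Counter
from itertools import count

UPLINKS = 4  # hypothetical number of equal-cost paths


def ecmp_path(flow: tuple, n_paths: int = UPLINKS) -> int:
    """ECMP: hash the flow 5-tuple so every packet of a flow takes one path."""
    key = "|".join(map(str, flow)).encode()
    return zlib.crc32(key) % n_paths


def make_sprayer(n_paths: int = UPLINKS):
    """Packet spraying: rotate over paths per packet, regardless of flow."""
    counter = count()
    return lambda: next(counter) % n_paths


# Two long-lived flows of 1,000 packets each (addresses and ports are made up).
flows = [("10.0.0.1", "10.0.1.1", 49152, 4791, "udp"),
         ("10.0.0.2", "10.0.1.2", 49153, 4791, "udp")]

ecmp_load = Counter()
spray_load = Counter()
spray = make_sprayer()
for flow in flows:
    for _ in range(1000):
        ecmp_load[ecmp_path(flow)] += 1
        spray_load[spray()] += 1

print("ECMP  :", dict(ecmp_load))    # at most two of the four paths carry traffic
print("spray :", dict(spray_load))   # 500 packets on each of the four paths
```

The trade-off is ordering: ECMP keeps each flow's packets in order, whereas spraying can deliver them out of order, so the transport or the receiving NIC must tolerate reordering.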

Cost-Benefit Consideration

While deploying separate scale-up and scale-out networks involves additional CAPEX, the return on investment is significant due to:

- Enhanced performance and reduced downtime.

- Improved resource utilization and operational efficiency.

- Greater flexibility to adapt to evolving AI workloads and infrastructure requirements.

Future Proofing and Scalability

The architecture aligns with emerging trends such as:

- Next-Gen GPU and AI Accelerator Architectures:

- Evolving data center GPUs and custom ASICs

- Increased on-chip bandwidth requirements

- Advanced Interconnects:

- Adoption of CXL (Compute Express Link) for memory extension and pooling

- UALink and Ultra Ethernet (UEC) as high-speed interconnects for multi-GPU systems

- Evolving AI Workloads:

- Preparedness for trillion-parameter models and heterogeneous computing setups.

- Regulatory Compliance:

- Ensures data sovereignty and adherence to industry-specific security standards.

By implementing this strategy, organizations can build robust, scalable, and future-ready AI data center networks tailored to the growing complexity of modern AI workloads.

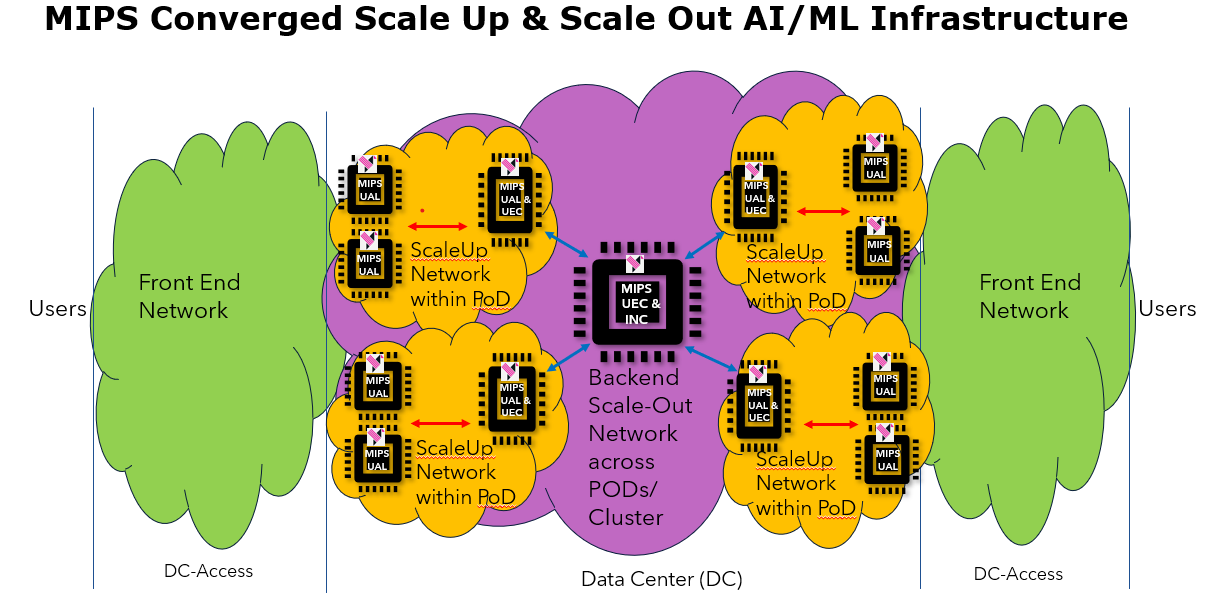

Advancing AI Connectivity: Ultra Accelerator Link and Ultra Ethernet Integration

The Ultra Accelerator Link (UALink) is an initiative aimed at redefining connectivity in AI data centers, particularly for scale-up scenarios. Spearheaded by an industry-wide consortium (including MIPS), UALink focuses on delivering high-speed, low-latency interconnects. It is specifically designed to facilitate seamless communication between AI accelerators and switches within tightly coupled AI computing PoDs, addressing the rigorous demands of AI/ML workloads.

Complementing UALink is the work of the Ultra Ethernet Consortium (UEC), which enhances Ethernet technologies to better serve scale-out scenarios. UEC focuses on optimizing Ethernet for large-scale AI and HPC workloads, ensuring high bandwidth and low latency for communication across distributed clusters. Together, UALink and UEC provide an open ecosystem for AI infrastructure, offering a viable alternative to proprietary interconnect solutions.

The synergy between UALink’s focus on intra-PoD connectivity and UEC’s emphasis on scalable inter-PoD connectivity enables a comprehensive approach to AI data center design. This integration provides flexibility, scalability, and performance, catering to the data and traffic characteristics of diverse AI/ML models. By addressing both local and distributed cluster connectivity challenges, these standards are poised to set the foundation for the next generation of AI infrastructure.

The “MIPS Converged Scale-Up and Scale-Out AI/ML Infrastructure” shown in the figure combines the complementary strengths of UALink and Ultra Ethernet under one architecture. This architecture is designed to adapt to a wide range of application needs, from training large-scale models to handling diverse organizational workloads. It offers a robust framework for building scalable and flexible AI systems that can evolve with future demands.

The MIPS converged architecture also offers unified, single-pane-of-glass management of all components of the scale-up and scale-out back-end networks. This is critical for the operation, administration, and maintenance of data center AI infrastructure.

Conclusion

The distinct but unified scale-up and scale-out network architecture in GPU and AI accelerator-based data centers is critical for optimizing AI infrastructure. This distinction enables predictable performance by addressing the differing requirements of compute-intensive AI workloads and distributed data flow, which are essential for achieving high efficiency and scalability. This architectural approach enhances security by isolating traffic types, simplifies operations through more manageable network configurations, and ensures future scalability to accommodate the growing demands of AI workloads.

As AI workloads evolve in complexity, the flexibility to adapt the infrastructure to diverse organizational needs, AI/ML model types, and traffic patterns becomes paramount. A converged AI infrastructure, such as the MIPS Converged Scale-Up & Scale-Out AI/ML Infrastructure, offers the adaptability needed to maintain high performance across different use cases. Because managing separate scale-up and scale-out networks is complex, the converged architecture provides the needed simplification, and its long-term benefits in performance, security, and scalability justify the approach. This ultimately supports the seamless integration of diverse AI/ML applications.

Integrating the management and control planes for scale-up and scale-out networks in a converged architecture simplifies network operations and improves overall operational efficiency. A unified plane streamlines monitoring, configuration, and orchestration, reducing the operational overhead of managing separate networks. This integration enables consistent policy enforcement, accelerates troubleshooting, and enhances real-time adaptability to dynamic AI/ML workloads. Furthermore, single-pane-of-glass management and a unified control plane foster better resource utilization, improved security, and scalability by offering a cohesive framework that supports diverse applications and evolving traffic patterns.

Our experience working with the new category of microprocessors, data processing units (DPUs or xPUs), combined with our deep industry experience, uniquely positions MIPS to build converged scale-up/scale-out architectures. To learn more about MIPS products and how they can help you build more efficient, adaptable, and better-utilized AI infrastructure platforms, please contact us here: https://mips.com/contact/